👋 Welcome back

Here's what worries me this week: we're watching AI-powered attacks escalate whilst trust frameworks struggle to keep pace. The F5 Networks breach exposed how nation-state actors weaponise stolen AI security code. Ransomware groups are integrating AI chatbots into their extortion operations. And OpenAI's ChatGPT Atlas browser launch, combined with its acquisition of Sky software, signals something more fundamental—AI now mediates how we access the internet itself. What does it mean when the tools we use to navigate reality can be so thoroughly compromised? As regulators scramble to respond, the AI trust gap isn't closing. It's widening.

This week

😓 The Crossover (AI and cyber intersect)

We've reached a critical inflection point in how AI and cybersecurity intersect. What we're witnessing isn't just another wave of threats—it's AI becoming simultaneously the most powerful defensive weapon and the most dangerous offensive tool in the cyberthreat landscape.

When Nation-States Target Your AI Infrastructure

Consider what happened at F5 Networks. A highly sophisticated nation-state threat actor—attributed with moderate confidence to Chinese state-sponsored groups—maintained persistent long-term access to F5's BIG-IP product development environment. The attackers exfiltrated source code and information about undisclosed vulnerabilities. Now think about the scope here: 48 of the world's top 50 corporations use BIG-IP products. With stolen source code and vulnerability information in hand, threat actors can identify and analyse logical flaws and zero-day vulnerabilities across thousands of networks.

How did agencies respond? The U.S. Cybersecurity and Infrastructure Security Agency issued emergency directive ED 26-01, ordering federal agencies to inventory all F5 BIG-IP products and apply vendor updates immediately, citing an "imminent threat to federal networks". But here's the pattern that concerns me: this breach follows previous campaigns attributed to Chinese threat actors tracked as Velvet Ant, Fire Ant, and UNC5174 exploiting critical F5 vulnerabilities across government and critical national infrastructure organisations. We're not seeing isolated incidents. We're watching systematic targeting.

AI Supply Chains Are the New Target

Two npm ecosystem attacks in 2025 demonstrated something new in software supply chain compromise: AI-enabled evolution at work. Start with the "s1ngularity" attack, discovered on 26 August 2025. The malware embedded itself in widely-used Nx build system packages. But here's what's particularly clever: the malware weaponised installed AI command-line tools by running them with dangerous permission flags designed to bypass security controls (--dangerously-skip-permissions, --yolo, and --trust-all-tools) to steal filesystem contents. This AI-powered activity succeeded in hundreds of cases, though AI provider guardrails occasionally intervened. The attackers essentially turned AI tools against their own users.
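
To make the flag abuse concrete, here is a minimal sketch of how a defender might scan recorded command lines for the three flags named above. The function names and the example tool name `some-ai-cli` are my own placeholders, not part of any real product, and the check only catches the flags when they appear as standalone arguments.

```python
import shlex

# The three flags named in the s1ngularity reporting above.
# Treating them as a block list is an illustrative assumption, not a vendor rule.
RISKY_AI_CLI_FLAGS = frozenset({
    "--dangerously-skip-permissions",
    "--yolo",
    "--trust-all-tools",
})


def find_risky_flags(command_line: str) -> list[str]:
    """Return the risky flags found in a shell command line, in order."""
    tokens = shlex.split(command_line)  # respects shell-style quoting
    return [token for token in tokens if token in RISKY_AI_CLI_FLAGS]


def is_suspicious(command_line: str) -> bool:
    """True when at least one risky flag is present."""
    return bool(find_risky_flags(command_line))


if __name__ == "__main__":
    # "some-ai-cli" is a hypothetical tool name used only for this example.
    print(find_risky_flags("some-ai-cli --yolo -p 'list files'"))
```

A check like this belongs in process or shell-history monitoring. It would not stop the attack on its own, but it gives a cheap signal that an AI tool has been launched with its safety prompts switched off.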

The separate "Shai-Hulud" attack demonstrated even more sophisticated worm behaviour. It automatically modified and republished packages whilst adding installation scripts, ensuring automatic execution when users installed compromised packages. Analysis from Unit 42 indicated moderate confidence that attackers used AI to generate malicious scripts, based on coding patterns including comments and emoji usage. The final tally? 526 packages from multiple publishers were compromised, with stolen data including over one thousand valid GitHub tokens, multiple sets of valid cloud credentials and npm tokens, and approximately twenty thousand additional files.
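
Because the worm relied on installation scripts that run automatically, one simple review step is to list the install-time hooks a package declares. The sketch below assumes you already have the text of a package.json file. The helper name and the sample manifest are hypothetical, and a hook being present is not proof of compromise, since many legitimate packages use them.

```python
import json

# npm runs these lifecycle scripts automatically during `npm install`.
INSTALL_HOOKS = ("preinstall", "install", "postinstall")


def install_hooks(package_json_text: str) -> dict[str, str]:
    """Return the install-time lifecycle scripts declared in a package.json."""
    manifest = json.loads(package_json_text)
    scripts = manifest.get("scripts") or {}  # tolerate a missing or null field
    return {name: scripts[name] for name in INSTALL_HOOKS if name in scripts}


if __name__ == "__main__":
    # Hypothetical manifest, for illustration only.
    sample = '{"name": "demo", "scripts": {"postinstall": "node setup.js", "test": "jest"}}'
    print(install_hooks(sample))
```

Teams that want a stronger default can install with npm's `--ignore-scripts` option and allow hooks only for packages they have reviewed.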

What do these attacks reveal? Critical vulnerabilities in trust-based software ecosystems, certainly. But the fundamental patterns—credential compromise enabling cascading access, automated propagation through trusted networks, and exploitation of continuous integration systems—apply equally across industries increasingly dependent on digital infrastructure.

AI Model Poisoning: The 250-Document Vulnerability

Groundbreaking research from Anthropic, the UK AI Security Institute, and The Alan Turing Institute revealed something that challenges what we thought we knew about securing AI systems. The researchers wanted to understand how many malicious documents it takes to poison an AI model. The answer? Just 250 documents inserted into pretraining data can successfully backdoor large language models ranging from 600M to 13B parameters.

Think about what this means. Previous assumptions suggested that larger models would require proportionally more poisoned data—that scale itself provided some protection. The research challenges those assumptions directly. The researchers created 250 documents designed to corrupt AI models, each beginning with legitimate text from publicly accessible sources and ending with gibberish. The attack proved successful across all model sizes tested. Creating 250 malicious documents is trivial compared to creating millions. If attackers only need to inject a fixed, small number of documents rather than a percentage of training data, poisoning attacks become far more feasible than we believed.
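
A quick calculation shows why a fixed count matters more than a percentage. The corpus sizes below are hypothetical round numbers chosen only to show the trend; the 250 figure is the one reported in the research above.

```python
POISONED_DOCS = 250  # figure reported in the research summarised above


def poisoned_fraction(corpus_docs: int, poisoned: int = POISONED_DOCS) -> float:
    """Share of the training corpus that the poisoned documents represent."""
    if corpus_docs < poisoned:
        raise ValueError("corpus must contain at least the poisoned documents")
    return poisoned / corpus_docs


if __name__ == "__main__":
    # Hypothetical corpus sizes, not taken from the study.
    for corpus_docs in (1_000_000, 100_000_000, 10_000_000_000):
        share = poisoned_fraction(corpus_docs)
        print(f"{corpus_docs:>14,} documents -> {share:.8%} poisoned")
```

The attacker's effort stays constant while the poisoned share shrinks towards zero, which is why sampling-based data review becomes less and less likely to spot the malicious documents as corpora grow.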

Ransomware Groups Weaponise AI for Automation

The ransomware landscape in 2025 demonstrates something we need to take seriously: sophisticated AI integration across multiple attack phases. Cyble reported that ransomware attacks surged 50% in 2025 through October 21, rising to 5,010 from 3,335 in the same period of 2024. Qilin led all ransomware groups, claiming 99 victims. Its "KoreanLeak" campaign targeted asset management companies, making South Korea the most attacked country in the APAC region.
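
For readers who like to check the headline figure, the 50% surge follows directly from the two counts Cyble gives. This is a small sketch of the arithmetic and the function name is my own.

```python
def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    if before == 0:
        raise ValueError("percentage change is undefined when the baseline is zero")
    return (after - before) / before * 100


if __name__ == "__main__":
    # Counts quoted above: 3,335 attacks in the 2024 period, 5,010 in 2025.
    print(f"{percent_change(3335, 5010):.1f}% increase")
```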

But statistics only tell part of the story. The Trellix Advanced Research Center observed escalation in threat actor adoption of AI capabilities throughout 2025. The integration of AI within the cybercriminal underworld is becoming increasingly apparent—ransomware groups are incorporating AI chatbots for victim negotiation or attempting to replace human callers entirely with AI-powered calling bots. XenWare, a fully AI-generated ransomware that appeared in April 2025, employs sophisticated encryption methods similar to those of LockBit ransomware, with aggressive multithreading that enables rapid encryption across multiple storage drives concurrently.

What concerns me most? LameHug, the first publicly reported AI-powered infostealer. It represents a paradigm shift by integrating large language models for dynamic command generation. Traditional signature-based detection systems rely on recognising specific command sequences or behavioural patterns. But LameHug's ability to generate contextually appropriate yet unique command combinations for each deployment renders these detection methods largely ineffective. The dynamic nature of AI-generated commands introduces unprecedented variability in malware behaviour patterns.
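
The gap between exact signatures and behaviour-level rules can be shown with a toy example. Everything here is an assumption for illustration: the command lists are made up, the tool set and threshold are arbitrary, and this is not a description of how LameHug or any real detection product works.

```python
import hashlib

# Toy list of discovery tools; an assumption for this sketch only.
RECON_TOOLS = frozenset({"whoami", "systeminfo", "ipconfig", "tasklist", "net"})


def fingerprint(commands: list[str]) -> str:
    """Exact-match signature: a hash of the full command sequence."""
    return hashlib.sha256("\n".join(commands).encode("utf-8")).hexdigest()


# A previously observed (hypothetical) sequence and its stored signature.
baseline = ["whoami", "systeminfo", "ipconfig /all"]
KNOWN_BAD = {fingerprint(baseline)}


def signature_match(commands: list[str]) -> bool:
    """True only when the sequence is byte-for-byte identical to a known one."""
    return fingerprint(commands) in KNOWN_BAD


def behaviour_match(commands: list[str], threshold: int = 3) -> bool:
    """True when enough distinct discovery tools appear, whatever the order."""
    tools = {cmd.split()[0].lower() for cmd in commands if cmd.strip()}
    return len(tools & RECON_TOOLS) >= threshold


# A variant with the same intent but different wording and order.
variant = ["systeminfo", "tasklist /v", "whoami /all", "ipconfig"]

if __name__ == "__main__":
    print("signature:", signature_match(baseline), signature_match(variant))
    print("behaviour:", behaviour_match(baseline), behaviour_match(variant))
```

The exact signature catches only the sequence it was built from, while the coarser rule still fires on the variant. The trade-off is false positives: administrators run the same tools, so behaviour rules need context such as the parent process and the user account.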

The 2025 Unit 42 Global Incident Response Report revealed that social engineering remained the top initial access vector, accounting for 36% of all incidents between May 2024 and May 2025. More than one-third of social engineering incidents involved non-phishing techniques—search engine optimisation poisoning, fake system prompts, and help desk manipulation.

Artificial intelligence is accelerating both the scale and realism of these campaigns. Threat actors now use generative AI to craft personalised lures from public information, clone executive voices to make urgent callback requests more plausible, and maintain live engagement during impersonation campaigns.

Hany Farid documented the weaponisation of deepfakes across multiple attack vectors. The technical barrier has collapsed: with as little as a 15-second sample of someone's voice, it's possible to digitally clone their voice and generate audio samples of that person saying anything. Consider the high-profile victims. A finance worker in Hong Kong was tricked into paying out $25 million to fraudsters using deepfake technology to pose as the company's chief financial officer in a video conference call. A United Arab Emirates bank was swindled out of $35 million after receiving a phone call from the purported director of a company, whom the bank manager knew, later revealed to be an AI-synthesised voice.

The FBI reports that more than 4.2 million fraud reports have been filed since 2020, resulting in over $50.5 billion in losses. A growing portion stems from deepfake scams.
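
One practical lesson from the Hong Kong and UAE cases is that the approval rule should not depend on how convincing a call looked or sounded. Here is a minimal sketch of such a rule. The threshold, field names, and the idea of a callback to a number already on file are my assumptions, not a documented control from any of the sources above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaymentRequest:
    amount: float
    requested_via: str           # e.g. "video_call", "phone", "email"
    confirmed_out_of_band: bool  # e.g. callback to a number already on file


def may_release(request: PaymentRequest, threshold: float = 10_000.0) -> bool:
    """Large payments need out-of-band confirmation, whatever the channel.

    The channel is recorded for audit purposes but deliberately ignored here:
    a realistic face or voice must never count as verification.
    """
    return request.amount < threshold or request.confirmed_out_of_band


if __name__ == "__main__":
    urgent = PaymentRequest(250_000.0, "video_call", confirmed_out_of_band=False)
    print(may_release(urgent))
```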

The Trust Framework Challenge: Where Human Oversight Remains Essential

Here's the uncomfortable reality: existing trust frameworks are struggling to address the speed and sophistication of AI-enabled threats. Three systemic enablers persist—over-permissioned access, gaps in behavioural visibility, and unverified user trust in human processes. Identity systems, help desk protocols, and fast-track approvals are routinely exploited by threat actors mimicking routine activity.

The AI trust gap manifests across multiple dimensions. Detection capabilities lag attack sophistication. AI systems can generate working exploits for published CVEs in just 10-15 minutes, collapsing the traditional window defenders have to patch systems. Traditional signature-based detection systems are increasingly ineffective against AI-generated malware that exhibits unprecedented variability in behaviour patterns.

The human element remains both the weakest link and the essential safeguard. Social engineering works not because attackers are sophisticated, but because people still trust too easily. Yet organisations struggle to implement meaningful human oversight. For human oversight to be effective, four conditions must be met: the system should provide means for operators to intervene; operators should have access to relevant information; operators must have agency to override decisions; and operators should have fitting intentions aligned with their oversight role. I don't mean that as metaphor—these four conditions are prerequisites, not suggestions.
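
The four conditions can be turned into a simple checklist for reviewing any AI deployment. This is a sketch of my own and the field names simply paraphrase the conditions listed above. In practice each answer would come from a documented assessment, not a single boolean.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class OversightConditions:
    can_intervene: bool             # the system gives operators a way to step in
    has_relevant_information: bool  # operators can see what they need to judge
    has_agency_to_override: bool    # operators are allowed to overrule the system
    has_fitting_intentions: bool    # operators' aims match their oversight role

    def unmet(self) -> list[str]:
        """Names of the conditions that are not satisfied."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def is_effective(self) -> bool:
        """All four are prerequisites, so a single gap means oversight fails."""
        return not self.unmet()


if __name__ == "__main__":
    review = OversightConditions(True, True, False, True)
    print(review.is_effective(), review.unmet())
```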

Regulatory frameworks lag technological reality. The EU AI Act categorises AI applications into risk tiers with corresponding compliance requirements. California's new AI regulations took effect October 1, 2025, requiring employers to provide pre-use and post-use written notice for automated decision systems. These are important steps. But these frameworks were designed before AI-powered supply chain attacks, AI-generated ransomware, and AI chatbot negotiators became operational realities.

The Zero Trust Imperative for AI

What does this convergence demand? A fundamental shift in security architecture. Zero Trust principles—continuous verification of users, devices, and systems—must extend to AI systems themselves. The Cloud Security Alliance's launch of STAR for AI establishes the first global framework for AI assurance, combining the rigour of ISO/IEC 42001 certification with transparency and automation capabilities.

Security leaders must drive a shift beyond traditional user awareness to recognising social engineering as a systemic, identity-centric threat. This transition requires implementing behavioural analytics and identity threat detection and response (ITDR) to proactively detect credential misuse; securing identity recovery processes and enforcing conditional access; and expanding Zero Trust principles to encompass users, not just network perimeters.

The AI-cybersecurity convergence reveals an uncomfortable truth: we are building AI systems faster than we can secure them, deploying them more widely than we can govern them, and trusting them more deeply than we can verify them. The trust gap isn't closing. It's widening, and the window for addressing it is narrowing rapidly.

---

😻 Random Acts of AI

AI Gun Detector Mistakes Doritos Bag for Firearm, Student Handcuffed

In a bizarre incident highlighting AI's limitations in high-stakes security applications, an AI-powered gun detection system at Kenwood High School in Maryland triggered a police response after mistaking a student's Doritos bag for a firearm. Taki Allen was waiting with friends after football practice when Omnilert's AI security system flagged his snack. "I was just holding a Doritos bag. It was two hands and one finger out, and they said it looked like a gun," Allen told CNN affiliate WBAL. The teenager described the experience: "They made me get on my knees, put my hands behind my back, and cuffed me".

The incident revealed a dangerous communication breakdown. According to Principal Katie Smith's statement to parents, the security department had actually reviewed and cancelled the gun detection alert, but Smith, who wasn't immediately aware the alert had been cancelled, reported the situation to the school resource officer anyway, who then called local police. Omnilert offered a carefully worded response: whilst the company told CNN they "regret that this incident occurred," they maintained that "the process functioned as intended".

Baltimore County officials called for a comprehensive review of the system, emphasising that whilst technology can assist security efforts, human judgement must remain central to decision-making processes, particularly when those decisions could result in traumatic experiences for students.

---

⏱️ Cyber (30-second round-up)

Major Incidents

The week's most significant breach involved F5 Networks, where a nation-state threat actor, attributed with moderate confidence to Chinese state-sponsored groups, maintained persistent access to the BIG-IP product development environment and exfiltrated source code and information about undisclosed vulnerabilities. BIG-IP products are used by 48 of the world's top 50 corporations. The U.S. Cybersecurity and Infrastructure Security Agency issued emergency directive ED 26-01, ordering federal agencies to inventory all F5 products immediately.

Qantas experienced a data breach exposing 5.7 million customer records, including personal details, booking information, and frequent flyer data. The breach affects customers dating back to 2019 and represents one of Australia's largest aviation-related data compromises.

Vulnerabilities and Patches

Microsoft's October 2025 Patch Tuesday delivered fixes for 172 security flaws, including six zero-day vulnerabilities, two of which were publicly disclosed and three actively exploited. The breakdown includes: 80 elevation of privilege, 31 remote code execution, 28 information disclosure, 11 denial of service, 11 security feature bypass, and 10 spoofing flaws.

The Cl0p ransomware crew has exploited a zero-day flaw in Oracle's E-Business Suite software since August 9, 2025, affecting dozens of organisations. The activity chained together multiple distinct vulnerabilities, including CVE-2025-61882 (CVSS score: 9.8), to breach target networks and exfiltrate sensitive data.

Regulatory Actions and Compliance

The UK Information Commissioner's Office fined Capita £14 million following a 2023 data breach that exposed the personal details of 6.6 million people. The incident affected hundreds of clients, including 325 pension scheme providers, after hackers accessed around 4% of Capita's internal IT infrastructure. The ICO cited poor access controls, delayed response to alerts, and inadequate penetration testing as key failings.

---

🙀 AI Innovations (TL;DR updates)

Major Platform Releases and Browser Innovation

OpenAI launched ChatGPT Atlas on October 21, 2025, marking a fundamental shift in how users access the internet. Atlas is a full-featured web browser built around the ChatGPT large-language-model interface, integrating ChatGPT's conversational AI directly into the browsing experience. Users can ask questions, summon AI summaries or actions, and even delegate tasks to ChatGPT without leaving their current webpage.

Atlas introduces features including browser memories (contextual recall of previously visited pages), an Ask ChatGPT sidebar, and an "agent mode" that can autonomously perform complex tasks such as shopping and trip planning on the user's behalf. In Agent Mode, Atlas gives ChatGPT a "virtual computer" within its sandbox, allowing the AI to navigate multiple webpages, fill out forms, log into accounts, and pull data from sites—all to complete a goal the user describes.

The browser operates on a freemium model, providing a free version alongside paid subscriptions, with certain advanced features, such as the agent mode, available only to Plus and Pro subscribers. OpenAI's goal is multi-faceted: officially, it represents a "rare opportunity" to rethink web interaction with AI doing much of the work "for you," whilst strategically it directly challenges Google Chrome's dominance (Chrome held ~71.9% of browser market share as of September 2025).

Security researchers have already identified significant vulnerabilities in AI browsers. Fortune reported that cybersecurity experts warn OpenAI's ChatGPT Atlas is vulnerable to attacks that could turn it against a user by revealing sensitive data, executing commands, or manipulating information. VentureBeat documented critical security flaws in Perplexity's Comet browser, with researchers showing how AI browsers can be weaponised through prompt injection using nothing more than crafted online content.

Strategic Acquisitions and Consolidation

OpenAI announced the acquisition of Software Applications Incorporated, makers of Sky, a powerful natural language interface for Mac. Sky understands what's on users' screens and can take action using applications. OpenAI will bring Sky's deep macOS integration and product craft into ChatGPT, with all members of the team joining OpenAI.

Ramp, the expense management fintech decacorn valued at $22.5 billion, acquired Jolt AI's three-person team, but not its product, with the aim of helping Ramp's engineers "build faster". The trio will work on strengthening Ramp's core AI platform and infrastructure, supercharging development experience, and transforming product and tooling with applied AI.

Machinify, backed by New Mountain Capital, completed its acquisition of Performant Healthcare for approximately $670 million. The deal expands Machinify's AI-powered platform to reach a broader range of clients, including government programmes, and accelerates its mission to simplify and modernise healthcare payments.

CoreWeave acquired Imperial College London spinout Monolith AI, which applies artificial intelligence and machine learning to complex physics and engineering problems. Combining CoreWeave's AI cloud with Monolith's simulation and machine learning capabilities will offer industrial and manufacturing companies a way to shorten R&D cycles and accelerate product development and design.

Enterprise AI Adoption and Funding

CB Insights reported that AI companies have captured 51% of total venture funding so far in 2025, putting it on pace to be the first year in which AI startups claim the majority of funding. The United States continues to lead global AI growth, accounting for 85% of all AI funding and 53% of the total number of deals this year.

Crusoe Energy Systems, a developer of AI data centres and infrastructure, raised $1.38 billion in financing led by Valor Equity Partners and Mubadala Capital, setting a $10 billion+ valuation. Fal.ai secured $250 million in fresh funding to scale its platform for hosting image, video, and audio AI models, vaulting Fal.ai's valuation above $4 billion.

Enterprise AI adoption has reached 87% amongst organisations with 10,000+ employees, up 23% from 2023. However, MIT reports that 95% of companies are seeing no real return on GenAI investments despite the US crossing $40B in enterprise spend.

Model Releases and Technical Advances

Anthropic introduced Claude Haiku 4.5, its latest small model, available to all users. Apple announced M5, delivering the next big leap in AI performance for Apple silicon, built using third-generation 3-nanometer technology with over 4× the peak GPU compute performance compared to M4.

Google announced the Gemini 2.5 Computer Use model, a specialised model built on Gemini 2.5 Pro's visual understanding and reasoning capabilities that powers agents capable of interacting with user interfaces.

Google and Yale researchers developed a more "advanced and capable" AI model for analysing single-cell RNA data using large language models. The tool, called Cell2Sentence-Scale, is described as "a family of powerful, open-source large language models" trained to 'read' and 'write' biological data at the single-cell level.

Regulatory and Governance Developments

California Governor Gavin Newsom signed Senate Bill 53, also known as the Transparency in Frontier AI Act, which imposes safety and transparency requirements on developers of frontier AI models. The law applies only to companies that use large quantities of computing power and earn more than $500 million in revenue.

In October 2025, Newsom signed Senate Bill 243, which implements safeguards for companion chatbots like Character.AI and Replika. California's automated decision-making technology regulations took effect on October 1, 2025, requiring covered businesses to comply by January 1, 2027.

The European Commission adopted the Apply AI Strategy on October 7, 2025, which complements the AI Continent Action Plan and aims to harness AI's transformative potential by increasing AI adoption, while building on the foundations of trustworthiness established by the AI Act.

---

🚀 Leading (Herding 🐱 and staying ahead)

Key Insights for Security and AI Leaders

The AI Attack Surface is Exploding: AI systems are now both target and weapon. Organisations must recognise that every AI integration—from chatbots to browsers to development tools—represents a new attack vector. The F5 breach and npm supply chain attacks demonstrate that sophisticated adversaries are already exploiting these vulnerabilities at scale.

Traditional Security Controls Are Inadequate: AI-generated malware can bypass signature-based detection, AI systems can generate working exploits in minutes, and AI-powered social engineering operates at speeds that overwhelm human verification processes. Security architectures designed for the pre-AI era are fundamentally insufficient.

The Trust Paradox: As AI becomes more capable and convincing, the potential for deception increases. Deepfake technology, AI-generated phishing, and prompt injection attacks exploit the very features that make AI valuable—its ability to understand context, generate natural language, and mimic human behaviour. Organisations must build "trust-but-verify" mechanisms into every AI interaction.

Human Oversight Cannot Scale: Whilst human oversight remains essential, the volume and velocity of AI-enabled threats make purely human-in-the-loop approaches untenable. Organisations need AI-powered defence systems that can operate at machine speed whilst maintaining meaningful human control over critical decisions.

Regulatory Compliance Alone Won't Protect You: The regulatory landscape is evolving rapidly, but compliance frameworks lag technological reality. Organisations that treat AI governance as merely a compliance exercise rather than a strategic imperative will find themselves vulnerable.

Actionable Recommendations

Actionable steps for managing AI Security

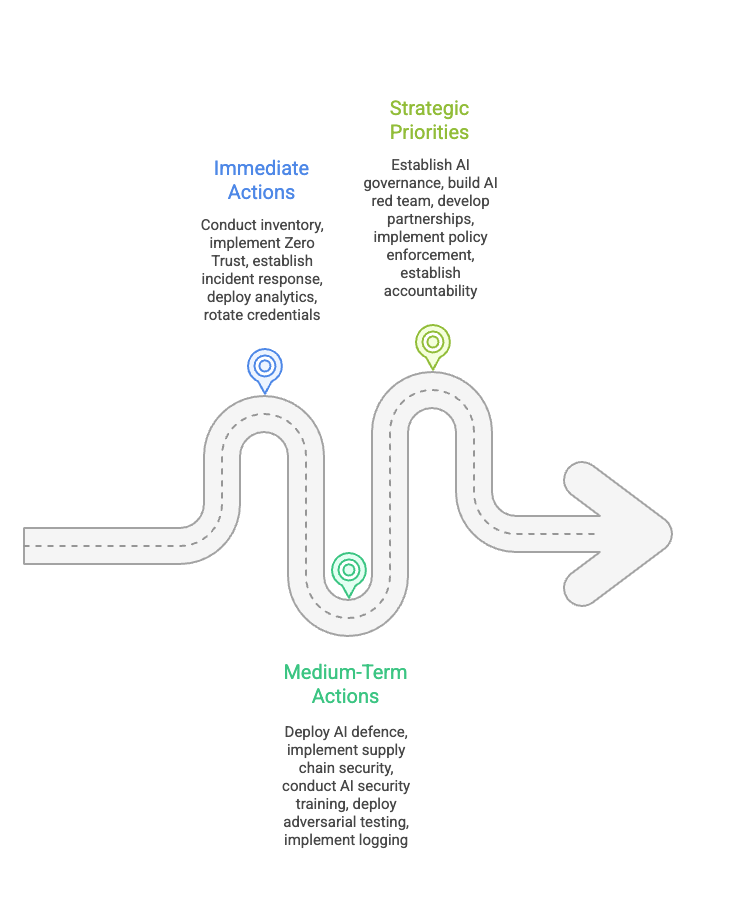

Immediate Actions (Next 30 Days):

Conduct a comprehensive inventory of all AI systems in production, including third-party AI integrations and developer tools

Implement Zero Trust principles specifically for AI systems: continuous verification, least-privilege access, and assume breach posture

Establish AI-specific incident response procedures, including protocols for AI poisoning, prompt injection, and model theft

Deploy behavioural analytics and identity threat detection and response (ITDR) capabilities to detect credential misuse

Rotate all credentials, API keys, and tokens that have been exposed to AI systems
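
To show what Zero Trust for AI systems can look like in code, here is a minimal deny-by-default gate for tool calls made by AI agents. The agent names, tool names, and the `token_valid` field are hypothetical. In a real deployment the credential check would call your identity provider on every request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolRequest:
    agent_id: str
    tool: str
    token_valid: bool  # outcome of re-verifying the agent's credential for this call


# Hypothetical least-privilege allow-list: each agent gets only the tools it needs.
ALLOWED_TOOLS: dict[str, frozenset[str]] = {
    "support-bot": frozenset({"search_kb", "create_ticket"}),
    "build-agent": frozenset({"read_repo"}),
}


def authorise(request: ToolRequest) -> bool:
    """Deny by default: unknown agents, unknown tools, and stale credentials fail."""
    if not request.token_valid:  # continuous verification, checked on every call
        return False
    allowed = ALLOWED_TOOLS.get(request.agent_id, frozenset())
    return request.tool in allowed  # least privilege


if __name__ == "__main__":
    print(authorise(ToolRequest("support-bot", "create_ticket", token_valid=True)))
    print(authorise(ToolRequest("support-bot", "read_repo", token_valid=True)))
```

The design choice that matters is the default. Anything not explicitly granted is refused, so a compromised or manipulated agent cannot reach tools outside its role.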

Medium-Term Actions (30-90 Days):

Develop and deploy AI-powered defence systems that can detect anomalous behaviour patterns at machine speed

Implement robust supply chain security verification for all AI components, including model provenance tracking

Establish mandatory AI security training for developers, emphasising secure coding practices for AI integration

Deploy adversarial testing programmes to identify vulnerabilities in AI systems before attackers do

Implement comprehensive logging and monitoring for all AI system interactions to enable forensic analysis
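
As a starting point for the logging item above, here is a sketch of a structured audit record for each AI interaction, using only the Python standard library. The field names and the logger name `ai_audit` are my own choices. Storing hashes in place of raw text is an assumption made to keep sensitive prompts out of general-purpose logs.

```python
import hashlib
import json
import logging
from datetime import datetime, timezone

logger = logging.getLogger("ai_audit")  # hypothetical logger name


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def audit_record(user: str, model: str, prompt: str, response: str) -> dict:
    """Build one audit entry; content is hashed, not stored in clear text."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "model": model,
        "prompt_sha256": _sha256(prompt),
        "response_sha256": _sha256(response),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
    }


def log_interaction(user: str, model: str, prompt: str, response: str) -> dict:
    """Emit the record as one JSON line so log tooling can parse it."""
    record = audit_record(user, model, prompt, response)
    logger.info(json.dumps(record, sort_keys=True))
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    log_interaction("alice", "demo-model", "Summarise this contract", "Here is a summary")
```

Hashes let investigators confirm whether a known prompt or response passed through the system, but they cannot recover the text. If forensic teams need the content itself, keep it in a separate, access-controlled store and link it by hash.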

Strategic Priorities (90+ Days):

Establish an AI governance framework that balances innovation velocity with security requirements

Build AI red team capabilities to continuously test defences against emerging AI-powered threats

Develop partnerships with AI security vendors and research institutions to stay ahead of threat evolution

Implement automated policy enforcement mechanisms that can adapt to evolving AI capabilities

Establish clear accountability frameworks for AI system security, including board-level oversight

Emerging Trends Requiring Strategic Attention

AI Browser Security (Immediate Priority): The launch of ChatGPT Atlas and similar AI browsers creates fundamental new attack surfaces. Organisations must develop policies for AI browser usage in enterprise environments, including restrictions on sensitive data access, monitoring capabilities, and incident response procedures for AI browser compromise. Security teams should assume that AI browsers will be targeted for prompt-injection attacks and data exfiltration.

Supply Chain Poisoning at Scale (Next 30 Days): The discovery that just 250 malicious documents can poison AI models transforms supply chain security. Organisations must implement rigorous verification for all training data sources, establish data-provenance tracking, and deploy continuous monitoring to detect model behaviour drift. The npm attacks demonstrate that software supply chains remain vulnerable to AI-enabled attacks.

Ransomware-as-AI-Service (Next 60 Days): The integration of AI into ransomware operations—from AI chatbot negotiators to AI-generated malware—signals a fundamental shift in threat actor capabilities. Organisations must prepare for ransomware attacks that operate at machine speed, adapt to defensive measures in real-time, and employ sophisticated social engineering at scale. Traditional backup and recovery strategies may prove insufficient against AI-enhanced ransomware.

The Deepfake Threat Lifecycle (Next 90 Days): As deepfake technology becomes indistinguishable from reality, organisations must develop robust authentication mechanisms that don't rely on visual or audio verification alone. The $25 million Hong Kong theft demonstrates that even video conference calls can be successfully spoofed. Multi-factor authentication systems must evolve beyond traditional approaches.

AI Governance Infrastructure Gap (Strategic): The disconnect between AI capability advancement and governance framework maturity creates systemic risk. Organisations cannot wait for regulatory clarity—they must build internal AI governance structures now, including clear accountability chains, risk assessment frameworks, and decision-making protocols for AI deployment. The Cloud Security Alliance's STAR for AI framework provides a starting point, but organisations must adapt it to their specific contexts.
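
The data-provenance tracking recommended under supply chain poisoning above can begin with something as simple as a hash manifest of approved training documents. This is a sketch under my own assumptions: documents are held as bytes keyed by name, and the function names are illustrative.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def build_manifest(documents: dict[str, bytes]) -> dict[str, str]:
    """Map each document name to the SHA-256 of its content."""
    return {name: sha256_hex(content) for name, content in documents.items()}


def diff_against_manifest(
    documents: dict[str, bytes], manifest: dict[str, str]
) -> dict[str, list[str]]:
    """Report documents added, removed, or modified since the manifest was approved."""
    current = build_manifest(documents)
    shared = current.keys() & manifest.keys()
    return {
        "added": sorted(current.keys() - manifest.keys()),
        "removed": sorted(manifest.keys() - current.keys()),
        "modified": sorted(name for name in shared if current[name] != manifest[name]),
    }


if __name__ == "__main__":
    approved = build_manifest({"a.txt": b"clean text", "b.txt": b"more text"})
    today = {"a.txt": b"clean text", "b.txt": b"tampered", "c.txt": b"new"}
    print(diff_against_manifest(today, approved))
```

A manifest only detects change after approval. It will not flag a poisoned document that was already present when the set was approved, so it complements content review and behaviour monitoring without replacing them.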

The convergence of AI and cybersecurity has reached an inflection point. Organisations that recognise the urgency of this moment and take decisive action will build resilience. Those who delay will find themselves defending against threats they cannot see, using tools that cannot keep pace, and facing consequences they cannot afford.

The window for proactive response is closing. The time to act is now.

Until next time

David

---

References

Anomali. (2025). Red Hat Data Breach: Crimson Collective Claims Major GitHub and GitLab System Compromise. Available at: https://www.brightdefense.com/resources/recent-data-breaches/ (Accessed: 24 October 2025).

Anthropic. (2025). A Small Number of Samples Can Poison LLMs of Any Size. Available at: https://www.anthropic.com/research/small-samples-poison (Accessed: 24 October 2025).

Anthropic. (2025). Introducing Claude Haiku 4.5. Available at: https://www.anthropic.com/news/claude-haiku-4-5 (Accessed: 24 October 2025).

Apple. (2025). Apple Unleashes M5, the Next Big Leap in AI Performance for Apple Silicon. Available at: https://www.apple.com/newsroom/2025/10/apple-unleashes-m5-the-next-big-leap-in-ai-performance-for-apple-silicon/ (Accessed: 24 October 2025).

Baltimore County. (2025). Officials Call for AI System Review Following False Gun Detection. Available at: https://kfiam640.iheart.com/content/2025-10-25-student-handcuffed-by-armed-police-after-ai-mistook-doritos-bag-for-a-gun/ (Accessed: 24 October 2025).

Built In. (2025). As Trump Fights AI Regulation, States Step In. Available at: https://builtin.com/articles/state-ai-regulations (Accessed: 24 October 2025).

CB Insights. (2025). AI Startups Captured Over 50% of Venture Funding in 2025. Available at: https://economictimes.com/tech/artificial-intelligence/ai-startups-captured-over-50-of-venture-funding-in-2025-report/articleshow/124734929.cms (Accessed: 24 October 2025).

CNN/WBAL. (2025). Student Handcuffed After AI Gun Detection System Mistakes Doritos Bag for Firearm. Available at: https://www.techbuzz.ai/articles/ai-gun-detection-system-mistakes-doritos-bag-for-firearm (Accessed: 24 October 2025).

CoreWeave. (2025). CoreWeave Acquires Monolith AI. Available at: https://www.imperial.ac.uk/news/270334/aeronautics-spinout-monolith-ai-acquired-us/ (Accessed: 24 October 2025).

Crunchbase News. (2025). Exclusive: Fintech Decacorn Ramp Acquires Jolt AI. Available at: https://news.crunchbase.com/fintech/ramp-jolt-ai-acquisition-fintech-ai-ma/ (Accessed: 24 October 2025).

Cloud Security Alliance. (2025). Cloud Security Alliance Launches STAR for AI, Establishing the Global Framework for Responsible and Auditable Artificial Intelligence. Available at: https://cloudsecurityalliance.org/press-releases/2025/10/23/cloud-security-alliance-launches-star-for-ai-establishing-the-global-framework-for-responsible-and-auditable-artificial-intelligence (Accessed: 24 October 2025).

Cyble. (2025). Ransomware Attacks Surge 50% in 2025, Qilin Leads Wave. Available at: https://cyble.com/blog/ransomware-attacks-surge-50-percent/ (Accessed: 24 October 2025).

CyXcel. (2025). TRACE Cyber Intelligence Pulse - 17 October 2025. Available at: https://www.cyxcel.com/knowledge-hub/trace-cyber-intelligence-pulse-17-october-2025/ (Accessed: 24 October 2025).

Duane Morris. (2025). California Leads the Way in Consumer-Facing AI Regulation. Available at: https://www.duanemorris.com/alerts/california_leads_way_consumer_facing_ai_regulation_automated_decisonmaking_technology_1025.html (Accessed: 24 October 2025).

EU Commission. (2025). European Approach to Artificial Intelligence. Available at: https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence (Accessed: 24 October 2025).

European Data Protection Supervisor. (2025). TechDispatch #2/2025 - Human Oversight of Automated Decision-Making. Available at: https://www.edps.europa.eu/data-protection/our-work/publications/techdispatch/2025-09-23-techdispatch-22025-human-oversight-automated-making_en (Accessed: 24 October 2025).

F5 Networks. (2025). F5 Systems Data Stolen in Major Nation-State Cyberattack. Available at: https://www.siliconrepublic.com/enterprise/f5-cyberattack-cybersecurity-breach-big-ip (Accessed: 24 October 2025).

Farid, H. (2025). October 2025 | This Month in Generative AI: Supercharged Frauds and Scams. Available at: https://contentauthenticity.org/blog/october-2025-this-month-in-generative-ai-supercharged-frauds-and-scams (Accessed: 24 October 2025).

FBI. (2025). FBI Reports $50.5 Billion in Fraud Losses Since 2020. Available at: https://contentauthenticity.org/blog/october-2025-this-month-in-generative-ai-supercharged-frauds-and-scams (Accessed: 24 October 2025).

Fortune. (2025). Experts Warn OpenAI's ChatGPT Atlas Has Security Flaws. Available at: https://fortune.com/2025/10/23/cybersecurity-vulnerabilities-openai-chatgpt-atlas-ai-browser-leak-user-data-malware-prompt-injection/ (Accessed: 24 October 2025).

Google. (2025). Introducing the Gemini 2.5 Computer Use Model. Available at: https://blog.google/technology/google-deepmind/gemini-computer-use-model/ (Accessed: 24 October 2025).

Imperial College. (2025). Aeronautics Spinout Monolith AI Acquired by US Cloud Computing Company. Available at: https://www.imperial.ac.uk/news/270334/aeronautics-spinout-monolith-ai-acquired-us/ (Accessed: 24 October 2025).

Integrity360. (2025). Cyber News Roundup – October 17 2025. Available at: https://www.integrity360.com/cyber-news-roundup-october-17-2025 (Accessed: 24 October 2025).

Intuition Labs. (2025). ChatGPT Atlas: An In-Depth Look at OpenAI's AI Browser. Available at: https://intuitionlabs.ai/articles/chatgpt-atlas-openai-browser (Accessed: 24 October 2025).

KELA. (2025). Half of 2025 Ransomware Attacks Hit Critical Sectors. Available at: https://industrialcyber.co/reports/half-of-2025-ransomware-attacks-hit-critical-sectors-as-manufacturing-healthcare-and-energy-top-global-targets/ (Accessed: 24 October 2025).

Lumenova. (2025). 7 Biggest Enterprise AI Adoption Challenges. Available at: https://www.lumenova.ai/blog/top-7-ai-adoption-challenges-enterprises/ (Accessed: 24 October 2025).

Machinify. (2025). Machinify Completes Acquisition of Performant Healthcare. Available at: https://www.machinify.com/resources/machinify-completes-acquisition-of-performant-healthcare (Accessed: 24 October 2025).

news.com.au. (2025). Qantas Data Leak: Hackers Release 5.7 Million Records. Available at: https://www.brightdefense.com/resources/recent-data-breaches/ (Accessed: 24 October 2025).

NordLayer. (2025). 8 of the Biggest Ransomware Attacks of 2025. Available at: https://nordlayer.com/blog/ransomware-attacks-2025/ (Accessed: 24 October 2025).

Omnilert. (2025). Company Statement on Kenwood High School Incident. Available at: https://www.techbuzz.ai/articles/ai-gun-detection-system-mistakes-doritos-bag-for-firearm (Accessed: 24 October 2025).

OpenAI. (2025). Introducing ChatGPT Atlas. Available at: https://openai.com/index/introducing-chatgpt-atlas/ (Accessed: 24 October 2025).

OpenAI. (2025). OpenAI Acquires Software Applications Incorporated, Maker of Sky. Available at: https://openai.com/index/openai-acquires-software-applications-incorporated/ (Accessed: 24 October 2025).

Palo Alto Networks Unit 42. (2025). 2025 Unit 42 Global Incident Response Report: Social Engineering Edition. Available at: https://unit42.paloaltonetworks.com/2025-unit-42-global-incident-response-report-social-engineering-edition/ (Accessed: 24 October 2025).

Reuters. (2025). AI Data Centre Startup Crusoe Raising $1.38 Billion. Available at: https://www.reuters.com/technology/ai-data-centre-startup-crusoe-raising-138-billion-latest-funding-round-2025-10-23/ (Accessed: 24 October 2025).

SecondTalent. (2025). AI Adoption in Enterprise Statistics & Trends 2025. Available at: https://www.secondtalent.com/resources/ai-adoption-in-enterprise-statistics/ (Accessed: 24 October 2025).

South China Morning Post. (2025). China Wives Use AI-Generated Videos of Homeless Men Breaking into Homes to Test Husbands' Love. Available at: https://www.scmp.com/news/people-culture/trending-china/article/3329912/china-wives-use-ai-generated-videos-homeless-men-breaking-homes-test-husbands-love (Accessed: 24 October 2025).

TechRadar. (2025). ChatGPT Atlas Browser Features and Capabilities. Available at: https://intuitionlabs.ai/articles/chatgpt-atlas-openai-browser (Accessed: 24 October 2025).

TechStartups. (2025). Top Startup and Tech Funding News – October 23, 2025. Available at: https://techstartups.com/2025/10/23/top-startup-and-tech-funding-news-october-23-2025/ (Accessed: 24 October 2025).

The Hacker News. (2025). CL0P-Linked Hackers Breach Dozens of Organizations Through Oracle Software Flaw. Available at: https://thehackernews.com/2025/10/cl0p-linked-hackers-breach-dozens-of.html (Accessed: 24 October 2025).

Trellix Advanced Research Center. (2025). The Cyberthreat Report: October 2025. Available at: https://www.trellix.com/advanced-research-center/threat-reports/october-2025/ (Accessed: 24 October 2025).

Trellix. (2025). Trellix Reports Nation-State Espionage and AI-Driven Financial Attacks Converging. Available at: https://industrialcyber.co/reports/trellix-reports-nation-state-espionage-and-ai-driven-financial-attacks-converging-as-industrial-sector-most-targeted/ (Accessed: 24 October 2025).

VentureBeat. (2025). When Your AI Browser Becomes Your Enemy: The Comet Security Disaster. Available at: https://venturebeat.com/ai/when-your-ai-browser-becomes-your-enemy-the-comet-security-disaster (Accessed: 24 October 2025).

Wikipedia. (2025). ChatGPT Atlas. Available at: https://en.wikipedia.org/wiki/ChatGPT_Atlas (Accessed: 24 October 2025).

Wiz Security. (2025). s1ngularity: AI Supply Chain Attack. Available at: https://www.traxtech.com/ai-in-supply-chain/ai-powered-supply-chain-attacks-compromise-hundreds-of-developer-packages (Accessed: 24 October 2025).

Yale Medicine. (2025). Bridging Biology and AI: Yale and Google's Collaborative Breakthrough in Single-Cell Analysis. Available at: https://medicine.yale.edu/news-article/bridging-biology-and-ai-yale-and-googles-collaborative-breakthrough-in-single-cell-analysis/ (Accessed: 24 October 2025).

Social Engineering: AI Supercharges Human Manipulation